AI powers a better way to diagnose malaria

CZ Biohub San Francisco’s Remoscope shows promise in clinical study

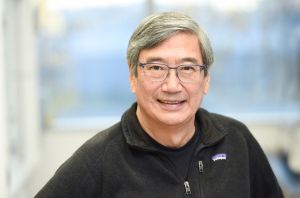

Brian Hie

In the last year, artificial intelligence–based language models, like the now-famous ChatGPT, have made a splash in the public consciousness. Such programs, which work by predicting the most likely next word in a sentence, have gained renown for their ability to produce coherent written works and engage in human-like conversation. Some medical researchers, however, took note of the technology for a different reason: they wondered if language models could be tweaked to make predictions about the alphabet-like codes, such as the string of “letters” making up the human genome, that pervade biology.

In recent months, Stanford Science Fellow Brian Hie and his colleagues have demonstrated that so-called protein language models can indeed make such predictions and can be a critical tool in advancing medicine. Working as a visiting researcher at Meta AI and a postdoctoral scholar with Stanford biochemist Peter Kim, a senior investigator at CZ Biohub San Francisco, Hie leveraged a model that, instead of looking at words from a human language, focuses on the 20 amino acids that string together in varying combinations to form all proteins in our bodies.

In two recent studies, Hie has shown protein language models can detect complex patterns in how these amino acid sequences change over time, yielding insights into evolution, disease outbreaks, and drug development that have eluded scientists thus far.

“There’s information in this data that we haven’t picked up before because we don’t ‘speak amino acid,’” Hie says. “But with this technology, we don’t have to. This approach lets us access information that’s been encoded by nature in millions of amino acid sequences and apply it to our needs.”

Whether they are found in viruses, plants, or human beings, proteins evolve over time via changes to the amino acid sequences that make them up. Studying how these changes come about is of fundamental interest to scientists investigating such questions as how life on Earth arose, which species came before others, and how pathogens give rise to new strains as they move through communities.

But efforts to reconstruct the evolutionary histories of proteins — a field known as protein phylogenetics — can be slow and have historically been constrained by computational limits. Hie and his colleagues reasoned that applying language models to protein evolution data might provide a powerful new approach.

“Current phylogenetic tree algorithms, for example, go back to the late 90s or early 2000s,” says Hie. “We wanted to reimagine phylogenetic analysis with modern tools, because there has been limited innovation in phylogenetic methods in decades.”

To do so, the team harnessed a model that evaluates the probability that amino acid mutations will occur in a protein sequence with the passage of time. They fed this model actual sequences of viral proteins collected by researchers in the course of tracking disease transmission in patients. Based on the assumption that sequence changes less likely to show up would have taken more time to arise, the scientists could use the output of the model to calculate the order in which the sequences in their data evolved. As reported in the journal Cell Systems, they found that the predictions made by the model closely matched the timeframes in which the sequences were collected in real life, demonstrating that the model could be used to predict how viruses might change across an outbreak.
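The ordering idea can be illustrated with a toy sketch in Python. This is not the team's model or their published method: the substitution probabilities below are invented for illustration, and the names `TOY_SUB_PROB`, `DEFAULT_PROB`, `surprisal`, and `order_by_surprisal` are hypothetical. A real protein language model would supply context-dependent probabilities learned from millions of sequences. The sketch only shows the assumption at work: changes the model rates as less likely add more "surprise," and sequences that have accumulated more surprise relative to a starting sequence are placed later.

```python
import math

# Hypothetical toy model: probability that one residue is replaced by another.
# These numbers are made up; a real protein language model would provide them.
TOY_SUB_PROB = {
    ("A", "G"): 0.10,
    ("A", "V"): 0.05,
    ("K", "R"): 0.20,
    ("L", "I"): 0.15,
}
DEFAULT_PROB = 0.01  # assumed probability for any substitution not listed


def surprisal(ancestor: str, descendant: str) -> float:
    """Sum of -log(probability) over positions where the two sequences differ."""
    if len(ancestor) != len(descendant):
        raise ValueError("sequences must be aligned to the same length")
    total = 0.0
    for a, d in zip(ancestor, descendant):
        if a != d:
            total += -math.log(TOY_SUB_PROB.get((a, d), DEFAULT_PROB))
    return total


def order_by_surprisal(root: str, sequences: list) -> list:
    """Sort sequences from least to most surprising relative to the root."""
    return sorted(sequences, key=lambda s: surprisal(root, s))


root = "AKLA"
samples = ["GRLA", "AKLA", "GRIV", "ARLA"]
print(order_by_surprisal(root, samples))
# prints ['AKLA', 'ARLA', 'GRLA', 'GRIV']
```

In this toy setup the inferred order could then be compared against the dates on which the sequences were actually collected, which is the kind of check the article describes.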

Peter Kim (Credit: Barbara Ries)

The model achieved similar success in predicting the order of species evolution when given sequences of common proteins, for example one called cytochrome c, which is found in mammals, fungi, plants, and other species.

“The results suggest that you can actually learn the plausible mutations that nature has used to improve evolution,” says senior author Kim. “It leads to the speculation that the mutations in proteins that evolution chooses to test are not random and it could be that this is telling us something really fundamental about how evolution works.”

Before their study had even been finalized, Hie and Kim began to think about how this work might be applied to medicine, in particular to optimizing the development of therapeutic antibodies. Now the fastest-growing class of drugs, these antibodies allow physicians to precisely target specific troublemaking proteins inside our bodies and have proved effective in fighting off foreign pathogens and cancerous cells, and even in shutting down the overzealous immune responses that lead to autoimmune diseases like rheumatoid arthritis and multiple sclerosis.

Therapeutic antibodies work by the same principles as those in our immune system, which evolve faster than any other proteins — transforming over the span of days to weeks to be able to fight off new invaders. They work by fitting like puzzle pieces to specific proteins found on pathogens, telling our bodies where to mount an attack once they’ve snapped into place.

But our bodies don’t get the puzzle pieces quite right on their first try. Instead, new antibodies undergo a natural form of “directed evolution,” known as affinity maturation, in which our bodies continuously mutate and test them until they get a result that fits snugly with the offending target. Researchers designing antibody drugs mimic this process in the lab, but the work is tedious and resource-intensive, which contributes to the high cost of antibody drugs for patients. Hie and his colleagues wondered if protein language models could analyze how antibodies have evolved in the past to propose new options without the arduous trial-and-error process that scientists currently depend on.

To find out, the team selected seven different antibodies known to target flu viruses, coronaviruses, or ebolavirus, and fed their sequences into their protein language model. Though the model contained no information about how antibodies work and did not “know” what kinds of proteins it was working on, it produced various suggestions for how the antibodies could be improved through evolution.
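One simple way a language model could produce such suggestions is sketched below in Python, as an illustration rather than a description of the team's actual pipeline. It assumes the model returns, for each position in a sequence, a probability for each candidate amino acid, and it proposes any substitution the model rates as more likely than the residue already there. The per-position probabilities and the name `suggest_substitutions` are hypothetical; a real model would score all 20 amino acids at every position.

```python
def suggest_substitutions(sequence, position_probs):
    """Return (position, current_residue, suggested_residue) tuples for every
    substitution the model rates as more likely than the current residue."""
    if len(sequence) != len(position_probs):
        raise ValueError("need one probability table per residue")
    suggestions = []
    for i, (current, probs) in enumerate(zip(sequence, position_probs)):
        current_p = probs.get(current, 0.0)
        # Visit candidates from most to least likely so the strongest come first.
        for residue, p in sorted(probs.items(), key=lambda kv: -kv[1]):
            if residue != current and p > current_p:
                suggestions.append((i, current, residue))
    return suggestions


# Made-up probabilities for a three-residue fragment (illustration only).
fragment = "QVQ"
toy_probs = [
    {"Q": 0.6, "E": 0.3, "K": 0.1},
    {"V": 0.2, "I": 0.5, "L": 0.3},
    {"Q": 0.9, "E": 0.1},
]
print(suggest_substitutions(fragment, toy_probs))
# prints [(1, 'V', 'I'), (1, 'V', 'L')]
```

The sketch needs no knowledge of what the protein does or what it binds, which mirrors the point made above: the suggestions come only from patterns in sequence data, and each one still has to be made and tested in the lab.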

As described in a paper published in Nature Biotechnology, when the team created and tested those antibodies in the lab, they found that in all cases the new antibodies did a better job at targeting their respective viruses. In the case of an ebolavirus antibody from a patient who had recently been exposed to the virus, the model created a new version of the antibody that was 160 times better at targeting ebolavirus. This antibody, and many other suggested antibodies, were generated by the model literally in seconds instead of the weeks or more required by traditional methods.

“This technology should be added to the toolkit of everyone working to make antibodies,” says Kim, adding that he believes the new model can have an immediate effect on medicine, by leading to both new and improved drugs.

“Brian has made this technology open source,” he says. “That means that with just the push of a button, this is something that can help any antibody engineers—and it can help them today.”
